ICDAR2017 Competition on Layout Analysis for Challenging Medieval Manuscripts

Important dates

Introduction

Motivation

Objective

Performance measures

Evaluation tool

Description of the dataset

CSG18 & CSG863

CB55

Result file format

Task 1

Task 2

Task 3

Registration

How to submit

Organisers

Bibliography

News

July 18th: The private test set for all Tasks is now available for download.

June 28th: New Evaluation tool for Task 1 is now available as open source on GitHub.

June 28th: New pixel-labeled ground truth files for Task 1 are available for download.

June 15th: System submission and competition registration deadline extended until the 25th of June, Midnight CET.

June 7th: How to submit instructions are now available.

March 15th: Evaluation tool for Task 3 is now available as open source on GitHub.

February 24th: Evaluation tools for Task 1 and Task 2 are now available as open source on GitHub.

February 14th: Ground truth files for Task 1 and Task 2 are available for download.

February 2nd: New pixel-labeled ground truth files for Task 1 are available for download.

January 28th: Result file format for Task 1 is now available.

January 28th: Registration is now open !

January 25th: Training and validation sets for Task 1 are available for download.

Important dates

Registration deadline: June 18th

Submission deadline: June 18th

Introduction

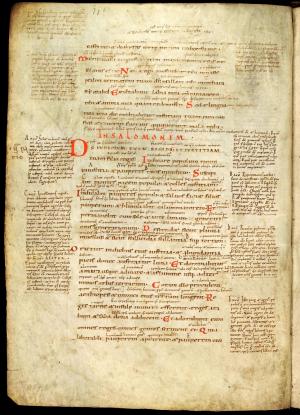

We invite all researchers and developers in the field of document layout analysis to register and participate in the new Competition on Layout Analysis for Challenging Medieval Manuscripts (HisDoc-Layout-Comp). We introduce a new challenging dataset of medieval manuscripts with complex layout, as it is described in [1]. The complexity of the layout lies on the fact that in all manuscripts the main text body is entwined with marginal and/or interlinear glosses, additions, and corrections. Glosses are brief explanatory notes located in the margin of a page (marginal gloss) or between lines of the main text (interlinear gloss). Beyond the task of the competition - i.e., the accurate layout analysis of the manuscripts - the competition organizers want to introduce a new way of managing the participants’ algorithms: we plan to use DIVAServices [2, 3] for submission and evaluation.

We plan to make the submitted methods available on DIVAServices after the competition; however, participants may opt out of the publication of their method on DIVAServices. Therefore will shall provide three means of participating:

- published on DIVAServices and open source,

- published on DIVAServices but closed source,

- not published on DIVAServices.

We propose three tasks for this competition:

- Task 1 - Layout analysis

- Task 2 - Baseline detection

- Task 3 - Textline segmentation

Motivation

Manuscripts can be preserved and more efficiently processed when digitised. We observe an increasing demand for automatic processing methods. Many of the historical manuscripts have complex layout and thus induce the need for more challenging algorithms than the state-of-the-art.

Moreover we think that algorithms should be available to the community, the main motivation behind our DIVAServices. Therefore, we encourage all participants to make their algorithms available through DIVAServices - the evaluation tool will also be available online in order to allow future methods to be compared directly to the competition results.

Objective

The objective of this competition is to allow researchers and practitioners from academia and industries to compare their performance in layout analysis tasks on a new dataset of challenging medieval manuscripts.This will assist computer science society to establish a benchmark on the performance of such methods and the humanists community to easier and quicker study manuscripts.

Performance measures

For Task 1 and Task 3 (layout analysis and textline segmentation), the accuracy of the class-wise pixel classification results will be calculated by dividing the number of correct classifications (True Positives + True Negatives) via the total number of pixels. Our ground truth is based on polygons, however we run the evaluation at pixel-level. Pixels which are inside of a polygon are considered as a special case:

- If they belong to the foreground, then they must be classified as belonging to the same class as the polygon.

- If they belong to the background, we call them boundary pixels, and we accept them to be classified either as belonging to the same class as the polygon, or as background.

Furthermore, in our proposed GT a pixel can have more than one label, belonging to more than one classes (main text body, decorations and comments) except for the background class. For example a pixel can be a part of the main text body but at the same time can also be a part of the decoration.

The performance will be measured in terms of mean IU as proposed in [4], with the extension of multi-labeling.

For Task 2 (baseline detection) we will use a new performance measure. This measure will be based on finding the start and end points of the baseline of each textline. The quality of the segmentation will be evaluated according to https://github.com/Transkribus/TranskribusBaseLineMetricTool . The ground truth baselines are extracted using the tight polygons from the layout ground truth and a binarization method. The procedure is as follows:

- the document image is binarized

- for each text polygon, we get the list of lower contour points

- we use the least square methods to find a baseline through these points

- we compute the median distance between the points and the line, and discard the points which are farther than 50% of the median distance (outliers)

- we compute the baseline of the remaining points (inliers)

There is a second competition on baseline detection (cBAD).

Evaluation tool

Task 1: https://github.com/DIVA-DIA/LayoutAnalysisEvaluator

Task 2: https://github.com/Transkribus/TranskribusBaseLineMetricTool

A detailed description is available at http://arxiv.org/abs/1705.03311.

Task 3: https://github.com/DIVA-DIA/LineSegmentationEvaluator.

Description of the Data Set

DIVA-HisDB is a collection of three medieval manuscripts that have been chosen regarding the complexity of their layout, together with partners from e-codices and the Humanities faculty in the University of Fribourg:

- St. Gallen, Stiftsbibliothek, Cod. Sang. 18, codicological unit 4 (CSG18),

- St. Gallen, Stiftsbibliothek, Cod. Sang. 863 (CSG863),

- Cologny-Geneve, Fondation Martin Bodmer, Cod. Bodmer 55 (CB55).

For annotating the three selected medieval manuscripts we use GraphManuscribble [6], a semi-automatic tool which is based on document graphs and pen-based scribbling interaction.

The database consists of 150 pages in total. In particular:

-

30 pages/manuscript for training

- including 10 public-test pages

- 10 pages/manuscript for validation and

- 10 pages/manuscript for testing.

The public-test, training and validation sets for both Tasks are already available for download but the test set will not be revealed until after the competition.

The images are in JPG format, scanned at 600 dpi, RGB color. The ground truth of the database is available as:

- pixel-label images and

- PAGE [7]

There are four different annotated classes which might overlap: main text body, decorations, comments and background. In the pixel-label images the classes are encoded by RGB values as follows:

- RGB=0b00...1000=0x000008: main text body

- RGB=0b00...0100=0x000004: decoration

- RGB=0b00...0010=0x000002: comment

- RGB=0b00...0001=0x000001: background (out of page)

Note that the GT might contain multi-class labeled pixels, for all classes except for the background. For example:

main text body+comment : 0b...1000 | 0b...0010 = 0b...1010 = 0x00000A

main text body+decoration : 0b...1000 | 0b...0100 = 0b...1100 = 0x00000C

comment +decoration : 0b...0010 | 0b...0100 = 0b...0110 = 0x000006

For the boundary pixel, we use the RGB value:

RGB=0b10...0000=0x800000 : boundary pixel (to be combined with one of the classe, expect background)

For example a boundary comment is represented as:

boundary+comment=0b10...0000|0b00...0010=0b10...0010=0x800002

CSG18 & CSG863

Total set: 50 pages/each

Description: dating from the 11th century, written in Latin language using Carolingian minuscule script. The number of writers in these two manuscripts is unspecified.

CB55

Total set: 50 pages

Description: dating from the 14th century, written in Italian and Latin language using chancery script. There is only one writer in this manuscript.

Result file format

Task 1

The submitted algorithm should take two inputs: the test image path with the extention .jpg (e.g. image1.jpg) and the output file path with the extention .png. The algorithm should generate as an output a pixel-label image with the extention .png (e.g. image1_output.png).

Example call:

your_layout_analysis.sh INPUT_PATH/image1.jpg OUTPUT_PATH/image1_output.png

In the pixel-label images the classes should encoded by RGB values as follows:

RGB=0b00...1000=0x000008: main text body

RGB=0b00...0100=0x000004: decoration

RGB=0b00...0010=0x000002: comment

RGB=0b00...0001=0x000001: background and out of page

Note that in the case of multi-class labeled pixel its value will be the addition of the corresponding classes.

For example:

main text body+comment : 0b...1000 | 0b...0010 = 0b...1010 = 0x00000A

main text body+decoration : 0b...1000 | 0b...0100 = 0b...1100 = 0x00000C

comment +decoration : 0b...0010 | 0b...0100 = 0b...0110 = 0x000006

Pay attention that the backgound class cannot be merged with other classes!

Task 2

The submitted algorithm should take three inputs: the test image path with the extention .jpg (e.g. image1.jpg), the test PAGE XML path with the extention .xml (e.g. image1.xml) and the output file path with the extention .xml. The algorithm should generate as an output a PAGE XML file (e.g. image1_output.xml).

Example call:

your_baseline_detection.sh INPUT_PATH/image1.jpg INPUT_PATH/image1.xml OUTPUT_PATH/image1_output.xml

The scope of the algorithm is to detect the baselines inside a defined TextRegion.

Task 3

The submitted algorithm should take three inputs: the test image path with the extention .jpg (e.g. image1.jpg), the test PAGE XML path with the extention .xml (e.g. image1.xml) and the output file path with the extention .xml. The algorithm should generate as an output a PAGE XML file (e.g. image1_output.xml).

Example call:

your_textline_segmentation.sh INPUT_PATH/image1.jpg INPUT_PATH/image1.xml OUTPUT_PATH/image1_output.xml

The scope of the algorithm is to detect the textlines of the main text body.

Registration

In order to participate in this competition, you need to send an email to:

Dr. Fotini Simistira - fotini.simistira@unifr.ch

providing the following information:

Name(s) of the participant(s): ..............................

Affiliation(s): ..............................

Contact details:

Contact person: ..............................

Email: ..............................

Which task you want to participate in: ........

Click here to send your registration by email

How to submit

Participants should send an executable file, a bash script, a virtual machine, or even a docker image (preferably). The methods should not expect specific hardware or software installations (e.g., machine learning libraries should be used in CPU-Option).

Binary-Submission

- All dependencies should be in the same directory or a subdirectory.

- Code should be compiled against Ubuntu-14.04 or 16.04 (64bit)

- Windows binaries are also possible

- Provide a Link for downloading the specific zip file

VirtualBox-Image

- Provide a VirtualBox-Image as download link

- Provide instructions how the method can be ran inside the VirtualBox

Docker-Image

- Provide the reference image name as hosted on docker hub (https://hub.docker.com)

- Provide instructions how the docker image can be executed

If you have other specific requirements or technical questions, contact Marcel Würsch.

Send a link to download either a zip-file including all dependencies, a virtual machine, or a docker image should be send to Fotini Simistira.

Organisers

Document Image and Voice Analysis (DIVA) Research Group

Department of Informatics

University of Fribourg, Boulevard de Pérolles 90, 1700 Fribourg, Switzerland

Contacts:

Fotini Simistira

Mathias Seuret

Marcel Würsch

Kai Chen

Manuel Bouillon

Michele Alberti

Angelika Garz

Marcus Liwicki

E-mail:

fotini.simistira@unifr.ch

Bibliography

- F. Simistira, M. Seuret, N. Eichenberger, A. Garz, M. Liwicki, R. Ingold. DIVA-HisDB: A Precisely Annotated Large Dataset of Challenging Medieval Manuscripts, International Conference on Frontiers in Handwriting Recognition (ICFHR2016), pp. 471-476, 2016. PDF

- M. Würsch, R. Ingold, M. Liwicki. SDK Reinvented: Document Image Analysis Methods as RESTful Web Services, International Workshop on Document Analysis Systems (DAS2016), p. 90-95, 2016. DOI: 10.1109/DAS.2016.56

- https://www.github.com/lunactic/DIVAServices

- J. Long, E. Shelhamer, E. Darrell. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3431-3440, 2015. PDF

- U. V. Marti, H. Bunke. Using a statistical language model to improve the performance of an HMM-based cursive handwriting recognition system. International Journal of Pattern Recognition and Artificial intelligence 15.01 (2001): 65-90. DOI: 10.1142/S0218001401000848

- A. Garz, M. Seuret, F. Simistira, A. Fischer, R. Ingold. Creating Ground Truth for Historical Manuscripts with Document Graphs and Scribbling Interaction, International Workshop on Document Analysis Systems (DAS2016), p. 126-131, 2016. DOI: 10.1109/DAS.2016.29, PDF

-

S. Pletschacher, A. Antonacopoulos. The PAGE (page analysis and ground-truth elements) format framework. In 20th International Conference on Pattern Recognition (ICPR), 2010.

DOI: 10.1109/ICPR.2010.72